MiniCPM - MiniCPM-2B: An end-side LLM outperforms Llama2-13B.

Created at: 2024-01-29 16:21:15

Language: Python

License: Apache-2.0

MiniCPM: 揭示端侧大语言模型的无限潜力

中文 | English

MiniCPM 技术博客 | OmniLMM 多模态模型 | CPM-C 千亿模型试用 | 加入我们的 discord 和 wechat

MiniCPM 是面壁智能与清华大学自然语言处理实验室共同开源的系列端侧大模型,主体语言模型 MiniCPM-2B 仅有 24亿(2.4B)的非词嵌入参数量。

- 经过 SFT 后,MiniCPM 在公开综合性评测集上,MiniCPM 与 Mistral-7B相近(中文、数学、代码能力更优),整体性能超越 Llama2-13B、MPT-30B、Falcon-40B 等模型。

- 经过 DPO 后,MiniCPM 在当前最接近用户体感的评测集 MTBench上,MiniCPM-2B 也超越了 Llama2-70B-Chat、Vicuna-33B、Mistral-7B-Instruct-v0.1、Zephyr-7B-alpha 等众多代表性开源大模型。

- 以 MiniCPM-2B 为基础构建端侧多模态大模型 MiniCPM-V,整体性能在同规模模型中实现最佳,超越基于 Phi-2 构建的现有多模态大模型,在部分评测集上达到与 9.6B Qwen-VL-Chat 相当甚至更好的性能。

- 经过 Int4 量化后,MiniCPM 可在手机上进行部署推理,流式输出速度略高于人类说话速度。MiniCPM-V 也直接跑通了多模态大模型在手机上的部署。

- 一张1080/2080可高效参数微调,一张3090/4090可全参数微调,一台机器可持续训练 MiniCPM,二次开发成本较低。

我们完全开源MiniCPM-2B的模型参数供学术研究和有限商用,在未来我们还将发布训练过程中的所有Checkpoint和大部分非专有数据供模型机理研究。 具体而言,我们目前已公开以下模型,地址详见 模型下载 部分

- 基于MiniCPM-2B的指令微调与人类偏好对MiniCPM-2B-SFT/DPO。

- 基于MiniCPM-2B的多模态模型MiniCPM-V,能力超越基于Phi-2的同参数级别多模态模型。

- MiniCPM-2B-SFT/DPO的Int4量化版MiniCPM-2B-SFT/DPO-Int4。

- 基于MLC-LLM、LLMFarm开发的MiniCPM手机端程序,文本及多模态模型均可在手机端进行推理。

局限性:

- 受限于模型规模,模型可能出现幻觉性问题。其中由于DPO模型生成的回复内容更长,更容易出现幻觉。我们也将持续进行MiniCPM模型的迭代改进。

- 为了保证在学术研究用途上模型的通用性,我们未对模型进行任何身份认同训练。同时由于我们用ShareGPT开源语料作为部分训练数据,模型可能会输出类似GPT系列模型的身份认同信息。

- 受限于模型规模,模型的输出受到提示词(prompt)的影响较大,可能多次尝试产生不一致的结果。

- 受限于模型容量,模型的知识记忆较不准确,后续我们将结合RAG方法来增强模型的知识记忆能力。

目录

模型下载

-

语言模型

-

多模态模型

HuggingFace ModelScope WiseModel MiniCPM-V MiniCPM-V MiniCPM-V OmniLMM OmniLMM OmniLMM

快速上手

在线体验

vLLM 推理

-

安装支持 MiniCPM 的 vLLM

- 因为 MiniCPM 采用 MUP 结构,在矩阵乘法中存在一定的放缩计算,与Llama类模型结构有细微差别。

- 我们基于版本为 0.2.2 的 vLLM 实现了 MiniCPM 的推理,代码位于仓库inference文件夹下,未来将会支持更新的vLLM 版本。

-

安装支持 MiniCPM 的 vLLM 版本

pip install inference/vllm- 将Huggingface Transformers仓库转为vLLM-MiniCPM支持的格式,其中

<hf_repo_path>,<vllmcpm_repo_path>均为本地路径

python inference/convert_hf_to_vllmcpm.py --load <hf_repo_path> --save <vllmcpm_repo_path>- 测试样例

cd inference/vllm/examples/infer_cpm

python inference.py --model_path <vllmcpm_repo_path> --prompt_path prompts/prompt_demo.txt- 期望输出

<用户>: Which city is the capital of China?

<AI>:

The capital city of China is Beijing. Beijing is a major political, cultural, and economic center in China, and it is known for its rich history, beautiful architecture, and vibrant nightlife. It is also home to many of China's most important cultural and historical sites, including the Forbidden City, the Great Wall of China, and the Temple of Heaven. Beijing is a popular destination for tourists from around the world, and it is an important hub for international business and trade.Huggingface 模型

MiniCPM-2B

- 安装

transformers>=4.36.0以及accelerate后,运行以下代码

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

torch.manual_seed(0)

path = 'openbmb/MiniCPM-2B-dpo-bf16'

tokenizer = AutoTokenizer.from_pretrained(path)

model = AutoModelForCausalLM.from_pretrained(path, torch_dtype=torch.bfloat16, device_map='cuda', trust_remote_code=True)

responds, history = model.chat(tokenizer, "山东省最高的山是哪座山, 它比黄山高还是矮?差距多少?", temperature=0.5, top_p=0.8, repetition_penalty=1.02)

print(responds)- 期望输出

山东省最高的山是泰山,海拔1545米。

相对于黄山(海拔1864米),泰山海拔较低,相差约319米。MiniCPM-V

import torch

from PIL import Image

from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('openbmb/MiniCPM-V/', trust_remote_code=True)

tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V', trust_remote_code=True)

model.eval().cuda()

image = Image.open('xx.jpg').convert('RGB')

question = 'What is in the image?'

msgs = [{'role': 'user', 'content': question}]

res, context, _ = model.chat(

image=image,

msgs=msgs,

context=None,

tokenizer=tokenizer,

sampling=True,

temperature=0.7

)

print(res)评测结果

评测设置

- 由于大模型评测难以统一,且大量评测也没有公开的prompt和测试代码,对于具体评测方式,我们只能尽量做到适合各类模型。

- 整体而言,我们测试时采用统一的prompt输入,并按照各模型对应的模板进行输入调整。

-

评测脚本及prompt已开源在我们的Github仓库中,也欢迎更多开发者来不断改进我们的评测方式。

- 文本评测部分,采用了我们的开源大模型能力评测框架UltraEval。以下为开源模型复现流程:

- 安装UltraEval

git clone https://github.com/OpenBMB/UltraEval.git cd UltraEval pip install -e .

- 下载相关数据并解压处理

wget -O RawData.zip "https://cloud.tsinghua.edu.cn/f/71b5232264ae4833a4d0/?dl=1" unzip RawData.zip python data_process.py - 执行评测脚本(提供了模板,可自定义)

bash run_eval.sh

- 安装UltraEval

- 文本评测部分,采用了我们的开源大模型能力评测框架UltraEval。以下为开源模型复现流程:

部署模式

- 因为MiniCPM采用Mup的结构,与现有模型在具体计算上有细微差别,我们是基于vllm=0.2.2版本进行了我们模型的实现。

- 对于非MiniCPM模型,我们采用了vllm=0.2.7的最新版本进行推理。

评测度量

- 对于QA任务(选择题任务),我们选用两种方式进行测试:

- PPL:将选项作为题目生成的延续,并根据各个选项的PPL来进行答案选择;

- 第二种是直接生成答案选项。

- 对于不同模型,这两种方式得到的结果差异较大。MiniCPM两种模式上的结果较为接近,而Mistral-7B-v0.1等模型在PPL上表现较好,直接生成上效果较差。

- 在具体评测时,我们以两种评测方式得分的最高者为最终结果,以此保证对比的公平性(以下表格中*号表示采用PPL)。

文本模型评测

越级比较:

| 模型 | 平均分 | 英文均分 | 中文均分 | C-Eval | CMMLU | MMLU | HumanEval | MBPP | GSM8K | MATH | BBH | ARC-E | ARC-C | HellaSwag |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Llama2-7B | 35.40 | 36.21 | 31.765 | 32.42 | 31.11 | 44.32 | 12.2 | 27.17 | 13.57 | 1.8 | 33.23 | 75.25 | 42.75 | 75.62* |

| Qwen-7B | 49.46 | 47.19 | 59.655 | 58.96 | 60.35 | 57.65 | 17.07 | 42.15 | 41.24 | 5.34 | 37.75 | 83.42 | 64.76 | 75.32* |

| Deepseek-7B | 39.96 | 39.15 | 43.635 | 42.82 | 44.45 | 47.82 | 20.12 | 41.45 | 15.85 | 1.53 | 33.38 | 74.58* | 42.15* | 75.45* |

| Mistral-7B | 48.97 | 49.96 | 44.54 | 46.12 | 42.96 | 62.69 | 27.44 | 45.2 | 33.13 | 5.0 | 41.06 | 83.92 | 70.73 | 80.43* |

| Llama2-13B | 41.48 | 42.44 | 37.19 | 37.32 | 37.06 | 54.71 | 17.07 | 32.55 | 21.15 | 2.25 | 37.92 | 78.87* | 58.19 | 79.23* |

| MPT-30B | 38.17 | 39.82 | 30.715 | 29.34 | 32.09 | 46.56 | 21.95 | 35.36 | 10.31 | 1.56 | 38.22 | 78.66* | 46.08* | 79.72* |

| Falcon-40B | 43.62 | 44.21 | 40.93 | 40.29 | 41.57 | 53.53 | 24.39 | 36.53 | 22.44 | 1.92 | 36.24 | 81.94* | 57.68 | 83.26* |

| MiniCPM-2B | 52.33 | 52.6 | 51.1 | 51.13 | 51.07 | 53.46 | 50.00 | 47.31 | 53.83 | 10.24 | 36.87 | 85.44 | 68.00 | 68.25 |

同级比较:

| 模型 | 平均分 | 英文均分 | 中文均分 | C-Eval | CMMLU | MMLU | HumanEval | MBPP | GSM8K | MATH | BBH | ARC-E | ARC-C | HellaSwag |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| TinyLlama-1.1B | 25.36 | 25.55 | 24.525 | 25.02 | 24.03 | 24.3 | 6.71 | 19.91 | 2.27 | 0.74 | 28.78 | 60.77* | 28.15* | 58.33* |

| Qwen-1.8B | 34.72 | 31.87 | 47.565 | 49.81 | 45.32 | 43.37 | 7.93 | 17.8 | 19.26 | 2.42 | 29.07 | 63.97* | 43.69 | 59.28* |

| Gemini Nano-3B | - | - | - | - | - | - | - | 27.2(report) | 22.8(report) | - | 42.4(report) | - | - | - |

| StableLM-Zephyr-3B | 43.46 | 46.31 | 30.615 | 30.34 | 30.89 | 45.9 | 35.37 | 31.85 | 52.54 | 12.49 | 37.68 | 73.78 | 55.38 | 71.87* |

| Phi-2-2B | 48.84 | 54.41 | 23.775 | 23.37 | 24.18 | 52.66 | 47.56 | 55.04 | 57.16 | 3.5 | 43.39 | 86.11 | 71.25 | 73.07* |

| MiniCPM-2B | 52.33 | 52.6 | 51.1 | 51.13 | 51.07 | 53.46 | 50.00 | 47.31 | 53.83 | 10.24 | 36.87 | 85.44 | 68.00 | 68.25 |

Chat模型比较:

| 模型 | 平均分 | 英文均分 | 中文均分 | C-Eval | CMMLU | MMLU | HumanEval | MBPP | GSM8K | MATH | BBH | ARC-E | ARC-C | HellaSwag |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ChatGLM2-6B | 37.98 | 35.17 | 50.63 | 52.05 | 49.21 | 45.77 | 10.37 | 9.38 | 22.74 | 5.96 | 32.6 | 74.45 | 56.82 | 58.48* |

| Mistral-7B-Instruct-v0.1 | 44.36 | 45.89 | 37.51 | 38.06 | 36.96 | 53.56 | 29.27 | 39.34 | 28.73 | 3.48 | 39.52 | 81.61 | 63.99 | 73.47* |

| Mistral-7B-Instruct-v0.2 | 50.91 | 52.83 | 42.235 | 42.55 | 41.92 | 60.51 | 36.59 | 48.95 | 40.49 | 4.95 | 39.81 | 86.28 | 73.38 | 84.55* |

| Qwen-7B-Chat | 44.93 | 42.05 | 57.9 | 58.57 | 57.23 | 56.03 | 15.85 | 40.52 | 42.23 | 8.3 | 37.34 | 64.44* | 39.25* | 74.52* |

| Yi-6B-Chat | 50.46 | 45.89 | 70.995 | 70.88 | 71.11 | 62.95 | 14.02 | 28.34 | 36.54 | 3.88 | 37.43 | 84.89 | 70.39 | 74.6* |

| Baichuan2-7B-Chat | 44.68 | 42.74 | 53.39 | 53.28 | 53.5 | 53 | 21.34 | 32.32 | 25.25 | 6.32 | 37.46 | 79.63 | 60.15 | 69.23* |

| Deepseek-7B-chat | 49.34 | 49.56 | 48.335 | 46.95 | 49.72 | 51.67 | 40.85 | 48.48 | 48.52 | 4.26 | 35.7 | 76.85 | 63.05 | 76.68* |

| Llama2-7B-Chat | 38.16 | 39.17 | 33.59 | 34.54 | 32.64 | 47.64 | 14.02 | 27.4 | 21.15 | 2.08 | 35.54 | 74.28 | 54.78 | 75.65* |

| MiniCPM-2B | 52.33 | 52.6 | 51.1 | 51.13 | 51.07 | 53.46 | 50.00 | 47.31 | 53.83 | 10.24 | 36.87 | 85.44 | 68.00 | 68.25 |

DPO后模型比较:

| 模型 | MT-bench |

|---|---|

| GPT-4-turbo | 9.32 |

| GPT-3.5-turbo | 8.39 |

| Mistral-8*7b-Instruct-v0.1 | 8.30 |

| Claude-2.1 | 8.18 |

| Zephyr-7B-beta | 7.34 |

| MiniCPM-2B | 7.25 |

| Vicuna-33B | 7.12 |

| Zephyr-7B-alpha | 6.88 |

| LLaMA-2-70B-chat | 6.86 |

| Mistral-7B-Instruct-v0.1 | 6.84 |

| MPT-34B-instruct | 6.39 |

多模态模型评测

| Model | Size | MME | MMB dev (en) | MMB dev (zh) | MMMU val | CMMMU val |

|---|---|---|---|---|---|---|

| LLaVA-Phi | 3B | 1335 | 59.8 | - | - | - |

| MobileVLM | 3B | 1289 | 59.6 | - | - | - |

| Imp-v1 | 3B | 1434 | 66.5 | - | - | - |

| Qwen-VL-Chat | 9.6B | 1487 | 60.6 | 56.7 | 35.9 | 30.7 |

| CogVLM | 17.4B | 1438 | 63.7 | 53.8 | 32.1 | - |

| MiniCPM-V(3B) | 3B | 1452 | 67.3 | 61.9 | 34.7 | 32.1 |

手机部署

部署步骤

- 进行Int4量化后,MiniCPM只占2GB空间,具备在端侧手机进行模型部署的条件。

- 对于不同的操作系统,我们进行了不同的适配。

- 注意:当前开源框架对手机支持还在完善,并非所有芯片与操作系统版本均能成功运行MLC-LLM或LLMFarm。

- Android、Harmony

- 使用开源框架MLC-LLM进行模型适配。

- 支持文本模型、多模态模型。

- 适用于MiniCPM-2B-SFT-INT4、MiniCPM-2B-DPO-INT4、MiniCPM-V。

- 编译安装MiniCPM指南

- iOS

- 使用开源框架LLMFarm进行模型适配。

- 支持文本模型。

- 适用于MiniCPM-2B-SFT-INT4、MiniCPM-2B-DPO-INT4。

- 编译安装MiniCPM指南

部署性能

- 我们未针对手机推理模型进行深度优化和系统测试,仅验证MiniCPM使用手机芯片进行推理的可行性。

- 此前尚未有工作尝试在手机上部署多模态大模型。我们此次在MLC-LLM上验证了手机部署MiniCPM-V的可行性,能够正常输入输出,但也存在图片处理时间较长的问题,需要进一步优化 :)。

- 我们也欢迎更多开发者进一步调优并更新下面的测试列表,不断提升端侧大模型在手机上的推理性能。

Demo & API 部署

基于Gradio的网页版Demo

- 使用如下命令启动基于Gradio的网页版demo:

# generation powered by vllm

python demo/vllm_based_demo.py --model_path <vllmcpm_repo_path>

# generation powered by huggingface

python demo/hf_based_demo.py --model_path <hf_repo_path>二次开发

-

高效参数微调

- 一张1080/2080可实现高效参数微调

- 高效参数微调代码

-

全参数微调 or 持续训练

- 使用BMTrain,借助重计算和ZeRO-3,一张3090/4090可实现全参数微调,一台机器可实现持续训练

- 相关代码也将陆续推出

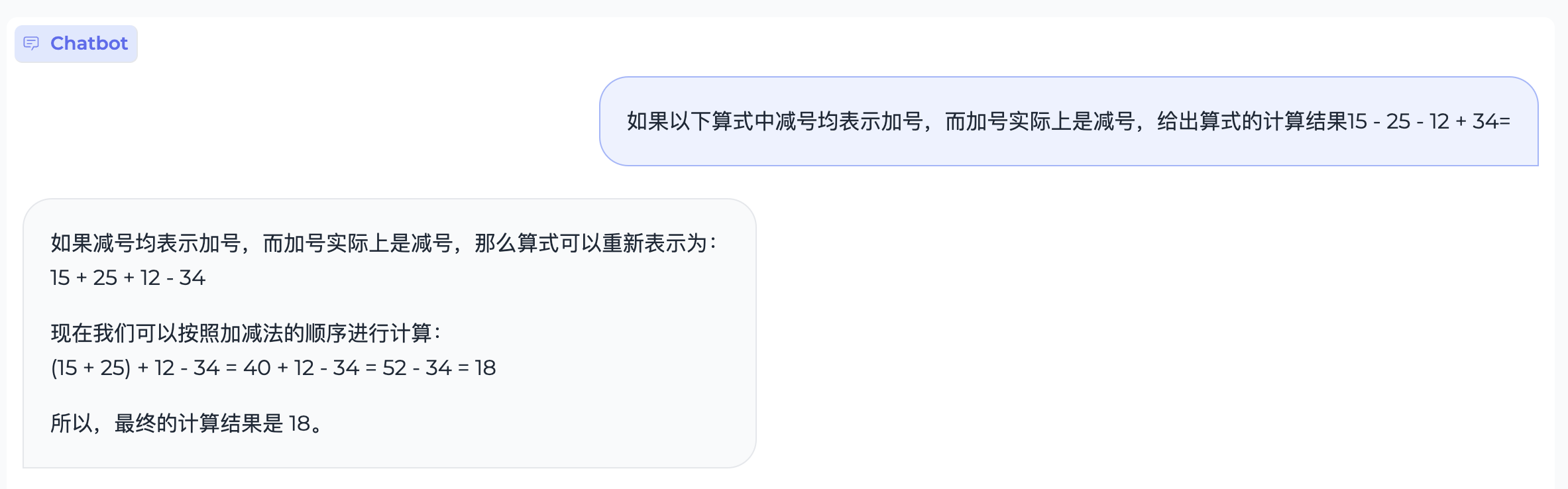

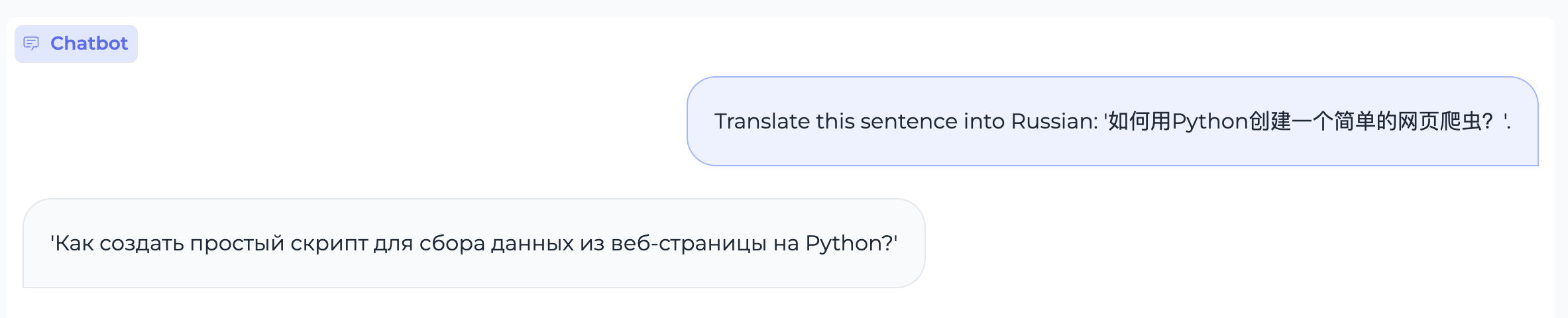

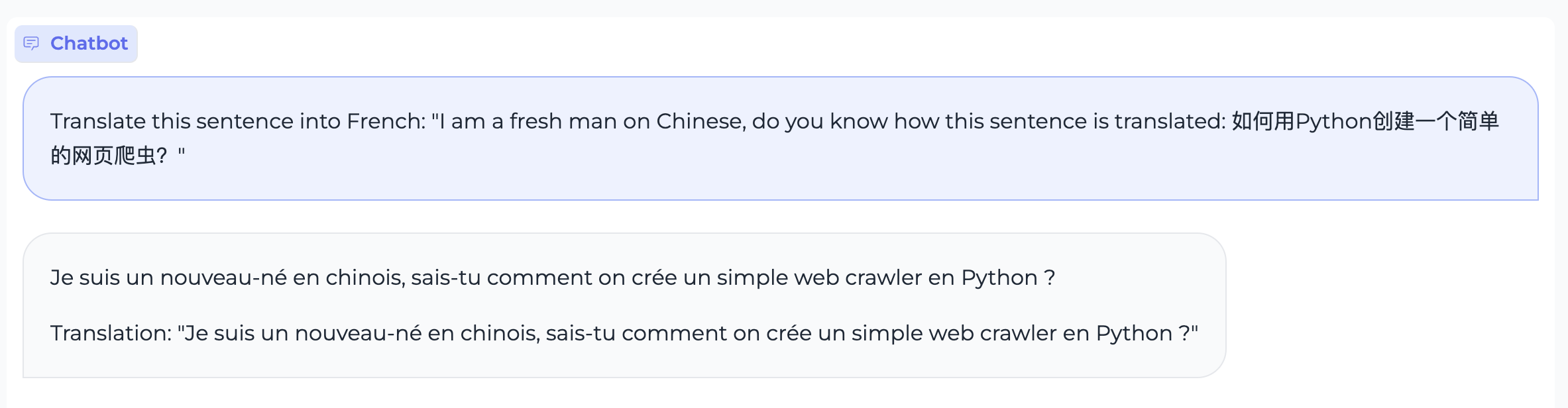

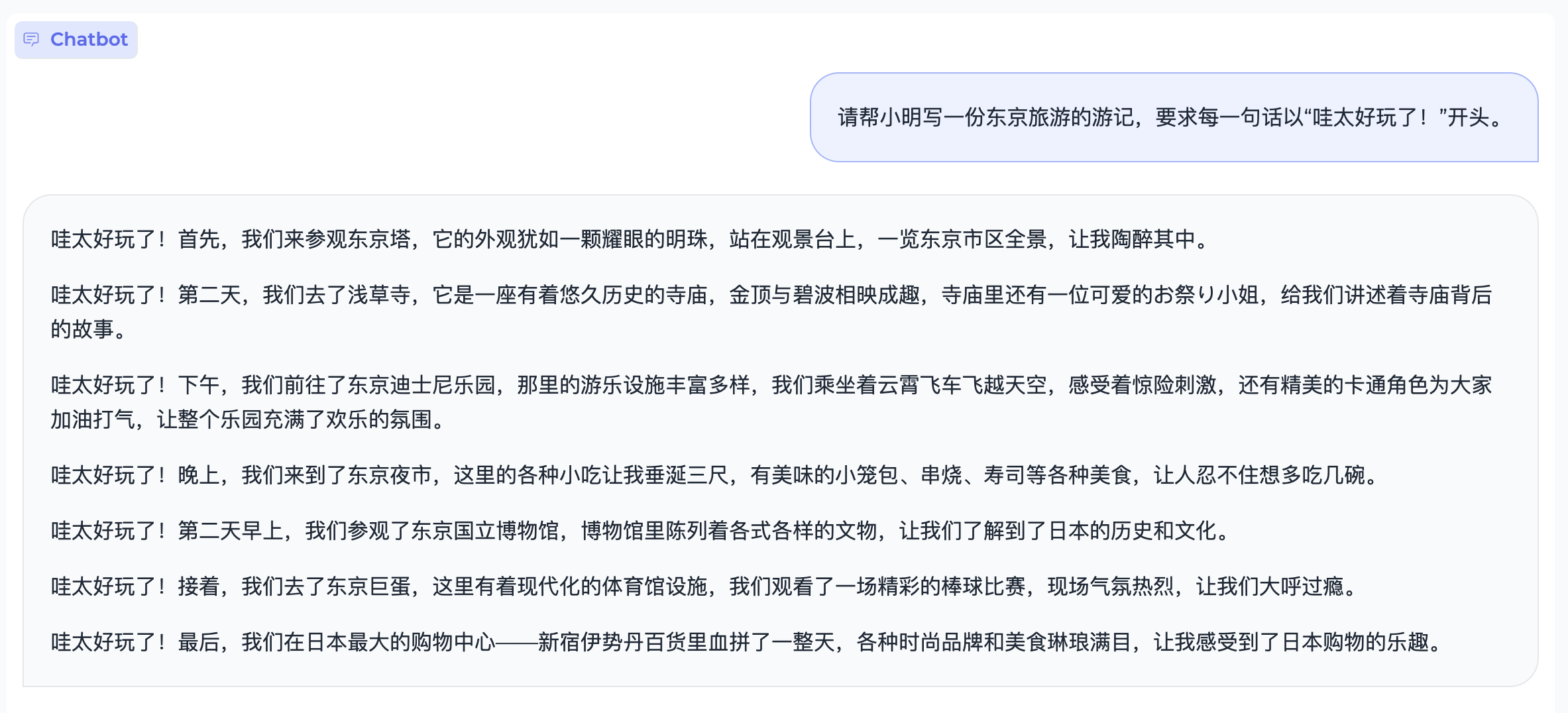

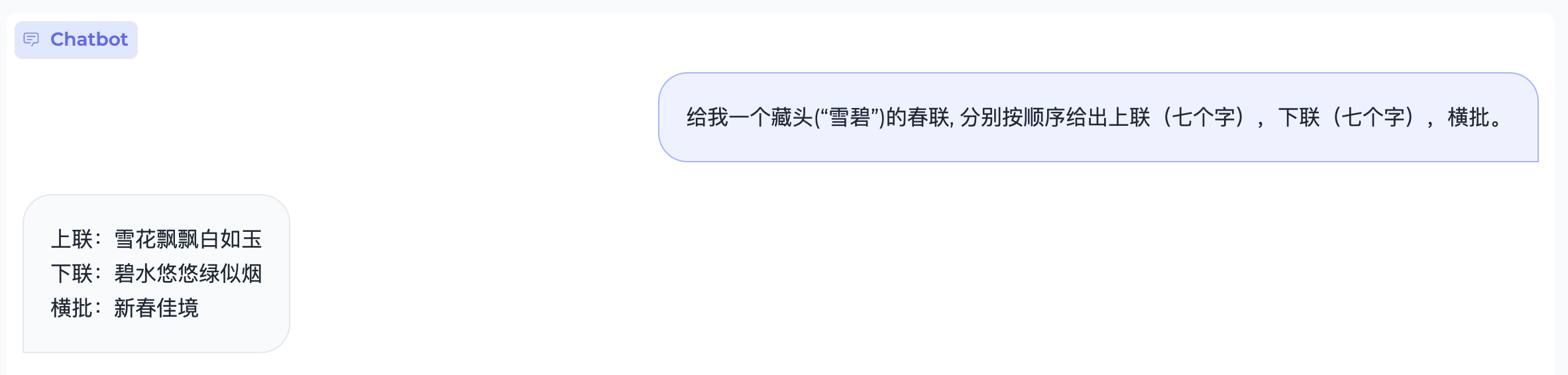

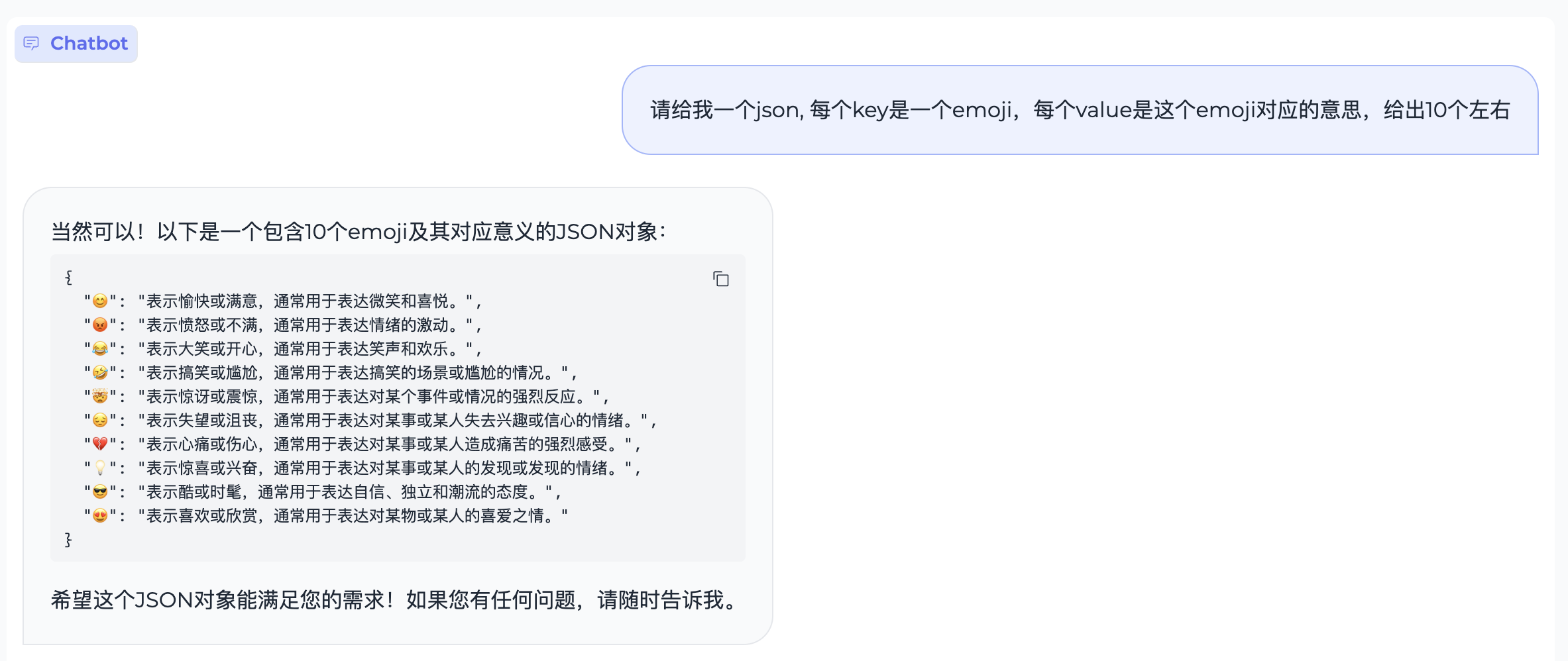

典型示例

文本生成

代码生成

数理逻辑

文本翻译

指令跟随

特殊字符

开源协议

模型协议

- 本仓库中代码依照 Apache-2.0 协议开源

- MiniCPM 模型权重的使用则需要遵循 “通用模型许可协议-来源说明-宣传限制-商业授权”。

- MiniCPM 模型权重对学术研究完全开放。

- 如需将模型用于商业用途,请联系cpm@modelbest.cn来获取书面授权,在登记后亦允许免费商业使用。

声明

- 作为一个语言模型,MiniCPM 通过学习大量的文本来生成内容,但它无法理解、表达个人观点或价值判断,它所输出的任何内容都不代表模型开发者的观点和立场。

- 因此用户在使用 MiniCPM 生成的内容时,应自行负责对其进行评估和验证。

- 如果由于使用 MinCPM 开源模型而导致的任何问题,包括但不限于数据安全问题、公共舆论风险,或模型被误导、滥用、传播或不当利用所带来的任何风险和问题,我们将不承担任何责任。

工作引用

- 如果觉得MiniCPM有助于您的工作,请引用我们的技术报告

@inproceedings{minicpm2024,

title={MiniCPM:Unveiling the Potential of End-side Large Language Models},

booktitle={OpenBMB Blog},

year={2024}

}