guidance - A guidance language for controlling large language models.

Where there is no guidance, a model fails, but in an abundance of instructions there is safety.

- GPT 11:14

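Guidance enables you to control modern language models more effectively and efficiently than traditional prompting or chaining. Guidance programs allow you to interleave generation, prompting, and logical control into a single continuous flow that matches how the language model actually processes the text. Simple output structures like Chain of Thought and its many variants (e.g., ART, Auto-CoT, etc.) have been shown to improve LLM performance. The advent of more powerful LLMs like GPT-4 allows for even richer structure, and `guidance` makes that structure easier and cheaper.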

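Features:

- [x] Simple, intuitive syntax, based on Handlebars templating.

- [x] Rich output structure with multiple generations, selections, conditionals, tool use, etc.

- [x] Playground-like streaming in Jupyter/VSCode Notebooks.

- [x] Smart seed-based generation caching.

- [x] Support for role-based chat models (e.g., ChatGPT).

- [x] Easy integration with Hugging Face models, including guidance acceleration for speedups over standard prompting, token healing to optimize prompt boundaries, and regex pattern guides to enforce formats.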

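Install

pip install guidance

Live streaming (notebook)

Speed up your prompt development cycle by streaming complex templates and generations live in your notebook. At first glance, Guidance feels like a templating language, and just like standard Handlebars templates, you can do variable interpolation (e.g., `{{proverb}}`) and logical control. But unlike standard templating languages, guidance programs have a well-defined linear execution order that directly corresponds to the token order as processed by the language model. This means that at any point during execution the language model can be used to generate text (using the `{{gen}}` command) or make logical control flow decisions. This interleaving of generation and prompting allows for precise output structure that produces clear and parsable results.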

import guidance

# set the default language model used to execute guidance programs

guidance.llm = guidance.llms.OpenAI("text-davinci-003")

# define a guidance program that adapts a proverb

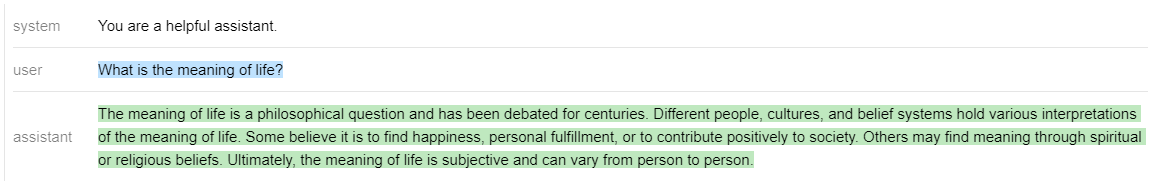

program = guidance("""Tweak this proverb to apply to model instructions instead.

{{proverb}}

- {{book}} {{chapter}}:{{verse}}

UPDATED

Where there is no guidance{{gen 'rewrite' stop="\\n-"}}

- GPT {{#select 'chapter'}}9{{or}}10{{or}}11{{/select}}:{{gen 'verse'}}""")

# execute the program on a specific proverb

executed_program = program(

proverb="Where there is no guidance, a people falls,\nbut in an abundance of counselors there is safety.",

book="Proverbs",

chapter=11,

verse=14

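)

After a program is executed, all the generated variables are easily accessible:

executed_program["rewrite"]

', a model fails,\nbut in an abundance of instructions there is safety.'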
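The linear execution order described above can be illustrated with a toy interpreter. This is only a sketch under stated assumptions, not how `guidance` is implemented: `run_template` and `fake_llm` are hypothetical names, only plain variables and a bare `gen` tag are handled, and the "model" is a stub that reports how much text it was shown.

```python
import re

# Hypothetical stand-in for a language model: it reports how many characters
# of preceding text it was given, so the example runs without any model.
def fake_llm(prefix: str) -> str:
    return f"<{len(prefix)} chars seen>"

TAG = re.compile(r"\{\{(.*?)\}\}")

def run_template(template: str, variables: dict, llm=fake_llm):
    """Walk the template left to right, in the order a model reads tokens."""
    out = []        # text emitted so far (prompt text plus generations)
    captured = {}   # named generations, e.g. captured["rewrite"]
    pos = 0
    for match in TAG.finditer(template):
        out.append(template[pos:match.start()])    # literal prompt text
        parts = match.group(1).split()
        if parts[0] == "gen":
            # The model only ever sees the text that precedes this tag.
            text = llm("".join(out))
            if len(parts) > 1:
                captured[parts[1].strip("'\"")] = text
            out.append(text)
        else:
            out.append(str(variables[parts[0]]))   # plain interpolation
        pos = match.end()
    out.append(template[pos:])
    return "".join(out), captured

text, captured = run_template(
    "{{proverb}}\nUPDATED\n{{gen 'rewrite'}}!", {"proverb": "abc"}
)
print(text)
print(captured)
```

Because each `gen` is evaluated against exactly the text before it, later prompt text can depend on earlier generations, which is the property the real library relies on.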

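Chat dialog (notebook)

Guidance supports API-based chat models like GPT-4, as well as open chat models like Vicuna, through a unified API based on role tags (e.g., `{{#system}}...{{/system}}`). This allows interactive dialog development that combines rich templating and logical control with modern chat models.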

# connect to a chat model like GPT-4 or Vicuna

gpt4 = guidance.llms.OpenAI("gpt-4")

# vicuna = guidance.llms.transformers.Vicuna("your_path/vicuna_13B", device_map="auto")

experts = guidance('''

{{#system~}}

You are a helpful and terse assistant.

{{~/system}}

{{#user~}}

I want a response to the following question:

{{query}}

Name 3 world-class experts (past or present) who would be great at answering this?

Don't answer the question yet.

{{~/user}}

{{#assistant~}}

{{gen 'expert_names' temperature=0 max_tokens=300}}

{{~/assistant}}

{{#user~}}

Great, now please answer the question as if these experts had collaborated in writing a joint anonymous answer.

{{~/user}}

{{#assistant~}}

{{gen 'answer' temperature=0 max_tokens=500}}

{{~/assistant}}

''', llm=gpt4)

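experts(query='How can I be more productive?')

Guidance acceleration (notebook)

When multiple generation or LLM-directed control flow statements are used in a single Guidance program, we can significantly improve inference performance by optimally reusing the Key/Value caches as we progress through the prompt. This means Guidance only asks the LLM to generate the green text below, not the entire program. This cuts this prompt's runtime in half compared with a standard generation approach.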

# we use LLaMA here, but any GPT-style model will do

llama = guidance.llms.Transformers("your_path/llama-7b", device=0)

# we can pre-define valid option sets

valid_weapons = ["sword", "axe", "mace", "spear", "bow", "crossbow"]

# define the prompt

character_maker = guidance("""The following is a character profile for an RPG game in JSON format.

```json

{

"id": "{{id}}",

"description": "{{description}}",

"name": "{{gen 'name'}}",

"age": {{gen 'age' pattern='[0-9]+' stop=','}},

"armor": "{{#select 'armor'}}leather{{or}}chainmail{{or}}plate{{/select}}",

"weapon": "{{select 'weapon' options=valid_weapons}}",

"class": "{{gen 'class'}}",

"mantra": "{{gen 'mantra' temperature=0.7}}",

"strength": {{gen 'strength' pattern='[0-9]+' stop=','}},

"items": [{{#geneach 'items' num_iterations=5 join=', '}}"{{gen 'this' temperature=0.7}}"{{/geneach}}]

}```""")

# generate a character

character_maker(

id="e1f491f7-7ab8-4dac-8c20-c92b5e7d883d",

description="A quick and nimble fighter.",

valid_weapons=valid_weapons, llm=llama

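)

When using LLaMA 7B, the prompt above typically takes just over 2.5 seconds to complete on an A6000 GPU. If we were to run the same prompt adapted to be a single generation call (the standard practice today), it takes about 5 seconds to complete (4 seconds of token generation and 1 second of prompt processing). This means Guidance acceleration delivers a 2x speedup over the standard approach for this prompt. In practice the exact speedup factor depends on the format of your specific prompt and the size of your model (larger models benefit more). Acceleration is also only supported for Transformers LLMs at the moment. See the notebook for more details.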
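As a worked check of the figures quoted above, the speedup is simply the ratio of the two wall-clock times. The variable names are illustrative, and "just over 2.5 seconds" is rounded to 2.5 here.

```python
# Timings quoted above for LLaMA 7B on an A6000 GPU, in seconds.
single_call_generation = 4.0   # token generation in one standard call
single_call_prompt = 1.0       # prompt processing in one standard call
accelerated_total = 2.5        # "just over 2.5 seconds", rounded

standard_total = single_call_generation + single_call_prompt
speedup = standard_total / accelerated_total

print(f"standard: {standard_total:.1f}s")
print(f"accelerated: {accelerated_total:.1f}s")
print(f"speedup: {speedup:.1f}x")
```

Most of the saving comes from the generation share of the standard call: fixed template text (keys, quotes, braces) no longer has to be sampled token by token.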

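Token healing (notebook)

The standard greedy tokenizations used by most language models introduce a subtle and powerful bias that can have all kinds of unintended consequences for your prompts. Using a process we call "token healing", `guidance` automatically removes these surprising biases, freeing you to focus on designing the prompts you want without worrying about tokenization artifacts.

Consider the following example, where we are trying to generate an HTTP URL string: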

# we use StableLM as an open example, but these issues impact all models to varying degrees

guidance.llm = guidance.llms.Transformers("stabilityai/stablelm-base-alpha-3b", device=0)

# we turn token healing off so that guidance acts like a normal prompting library

program = guidance('''The link is <a href="http:{{gen max_tokens=10 token_healing=False}}''')

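program()

Note that the output generated by the LLM does not complete the URL with the obvious next characters (two forward slashes). It instead creates an invalid URL string with a space in the middle. Why? Because the string "://" is its own token (`1358`), so once the model sees a colon by itself (token `27`), it assumes that the next characters cannot be "//"; otherwise, the tokenizer would not have used `27` and would instead have used `1358` (the token for "://").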
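The boundary effect described above can be reproduced with a toy tokenizer. This is a simplified sketch: `VOCAB` is a made-up vocabulary and `greedy_tokenize` uses plain longest-match, which only approximates how real subword tokenizers behave.

```python
# Toy vocabulary (hypothetical); real tokenizers have tens of thousands of entries.
VOCAB = ["http", "://", ":", "//", "/", " ", "a", "b"]

def greedy_tokenize(text, vocab=VOCAB):
    """Greedy longest-match tokenization: always take the longest vocab entry."""
    tokens = []
    i = 0
    while i < len(text):
        candidates = [t for t in vocab if text.startswith(t, i)]
        if not candidates:
            raise ValueError(f"cannot tokenize {text[i:]!r}")
        best = max(candidates, key=len)
        tokens.append(best)
        i += len(best)
    return tokens

prompt_tokens = greedy_tokenize("http:")      # prompt that ends at the colon
full_tokens = greedy_tokenize("http://ab")    # the text we actually want

print(prompt_tokens)
print(full_tokens)
```

The full string never contains the lone ":" token, so a model trained on text tokenized this way has rarely, if ever, seen ":" followed by "//". Ending a prompt on ":" therefore pushes it away from the continuation we want.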

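This bias is not limited to the colon character - it happens everywhere. Over 70% of the 10k most common tokens for the StableLM model used above are prefixes of longer possible tokens, and so cause token boundary bias when they are the last token in a prompt. For example, the ":" token `27` has 34 possible extensions, the " the" token `1735` has 51 extensions, and the " " (space) token `209` has 28,802 extensions.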
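Counting "extensions" is a simple prefix check over the vocabulary. The sketch below uses a small made-up vocabulary, so its numbers are illustrative only; the counts quoted above come from StableLM's real vocabulary.

```python
# Toy vocabulary (hypothetical).
VOCAB = [":", "://", ":)", "the", "then", "there", "them", " ", " a", " the"]

def extensions(token, vocab=VOCAB):
    """Longer vocabulary entries that start with `token`."""
    return [t for t in vocab if t.startswith(token) and t != token]

def prefix_fraction(vocab=VOCAB):
    """Fraction of tokens that are a prefix of at least one longer token."""
    with_extensions = [t for t in vocab if extensions(t, vocab)]
    return len(with_extensions) / len(vocab)

for tok in [":", "the", " "]:
    print(repr(tok), "->", extensions(tok))
print("fraction of prefix tokens:", prefix_fraction())
```

Any token with a non-empty extension list can cause boundary bias when it is the last token of a prompt.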

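`guidance` eliminates these biases by backing up the model by one token, then allowing the model to step forward while constraining it to only generate tokens whose prefix matches the last token. This "token healing" process eliminates token boundary biases and allows any prompt to be completed naturally:

guidance('The link is <a href="http:{{gen max_tokens=10}}')()

Rich output structure example (notebook)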

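To demonstrate the value of output structure, we take a simple task from BigBench, where the goal is to identify whether a given sentence contains an anachronism (a statement that is impossible because of non-overlapping time periods). Below is a simple two-shot prompt for it, with a human-crafted chain-of-thought sequence.

Guidance programs, like standard Handlebars templates, allow both variable interpolation (e.g., `{{input}}`) and logical control. But unlike standard templating languages, guidance programs have a unique linear execution order that directly corresponds to the token order as processed by the language model. This means that at any point during execution the language model can be used to generate text (the `{{gen}}` command) or make logical control flow decisions (the `{{#select}}...{{or}}...{{/select}}` command). This interleaving of generation and prompting allows for precise output structure that improves accuracy while also producing clear and parsable results.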

import guidance

# set the default language model used to execute guidance programs

guidance.llm = guidance.llms.OpenAI("text-davinci-003")

# define the few shot examples

examples = [

{'input': 'I wrote about shakespeare',

'entities': [{'entity': 'I', 'time': 'present'}, {'entity': 'Shakespeare', 'time': '16th century'}],

'reasoning': 'I can write about Shakespeare because he lived in the past with respect to me.',

'answer': 'No'},

{'input': 'Shakespeare wrote about me',

'entities': [{'entity': 'Shakespeare', 'time': '16th century'}, {'entity': 'I', 'time': 'present'}],

'reasoning': 'Shakespeare cannot have written about me, because he died before I was born',

'answer': 'Yes'}

]

# define the guidance program

structure_program = guidance(

'''Given a sentence tell me whether it contains an anachronism (i.e. whether it could have happened or not based on the time periods associated with the entities).

----

{{~! display the few-shot examples ~}}

{{~#each examples}}

Sentence: {{this.input}}

Entities and dates:{{#each this.entities}}

{{this.entity}}: {{this.time}}{{/each}}

Reasoning: {{this.reasoning}}

Anachronism: {{this.answer}}

---

{{~/each}}

{{~! place the real question at the end }}

Sentence: {{input}}

Entities and dates:

{{gen "entities"}}

Reasoning:{{gen "reasoning"}}

Anachronism:{{#select "answer"}} Yes{{or}} No{{/select}}''')

# execute the program

out = structure_program(

examples=examples,

input='The T-rex bit my dog'

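)

All of the generated program variables are now available in the executed program object:

out["answer"]

' Yes'

We compute accuracy on the validation set and compare it with using the same two-shot examples above without the output structure, as well as with the best reported result here. The results below agree with existing literature: even a very simple output structure drastically improves performance, even when compared against much larger models.

| Model | Accuracy |
|---|---|
| Few-shot learning with guidance examples, no CoT output structure | 63.04% |
| PaLM (3-shot) | Around 69% |
| Guidance | 76.01% |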

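Guaranteeing valid syntax JSON example (notebook)

Large language models are great at generating useful outputs, but they are not great at guaranteeing that those outputs follow a specific format. This can cause problems when we want to use the outputs of a language model as input to another system. For example, if we want to use a language model to generate a JSON object, we need to make sure that the output is valid JSON. With `guidance` we can both accelerate inference speed and ensure that the generated JSON is always valid. Below we generate a random character profile for a game with perfect syntax every time: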

# load a model locally (we use LLaMA here)

guidance.llm = guidance.llms.Transformers("your_local_path/llama-7b", device=0)

# we can pre-define valid option sets

valid_weapons = ["sword", "axe", "mace", "spear", "bow", "crossbow"]

# define the prompt

program = guidance("""The following is a character profile for an RPG game in JSON format.

```json

{

"description": "{{description}}",

"name": "{{gen 'name'}}",

"age": {{gen 'age' pattern='[0-9]+' stop=','}},

"armor": "{{#select 'armor'}}leather{{or}}chainmail{{or}}plate{{/select}}",

"weapon": "{{select 'weapon' options=valid_weapons}}",

"class": "{{gen 'class'}}",

"mantra": "{{gen 'mantra'}}",

"strength": {{gen 'strength' pattern='[0-9]+' stop=','}},

"items": [{{#geneach 'items' num_iterations=3}}

"{{gen 'this'}}",{{/geneach}}

]

}```""")

# execute the prompt

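out = program(description="A quick and nimble fighter.", valid_weapons=valid_weapons)

# and we also have a valid Python dictionary

out.variables()

Role-based chat model example (notebook)

Modern chat-style models like ChatGPT and Alpaca are trained with special tokens that mark out "roles" for different areas of the prompt. Guidance supports these models through role tags that automatically map to the correct tokens or API calls for the current LLM. Below we show how a role-based guidance program enables simple multi-step reasoning and planning.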

import guidance

import re

# we use GPT-4 here, but you could use gpt-3.5-turbo as well

guidance.llm = guidance.llms.OpenAI("gpt-4")

# a custom function we will call in the guidance program

def parse_best(prosandcons, options):

    best = int(re.findall(r'Best=(\d+)', prosandcons)[0])

    return options[best]

# define the guidance program using role tags (like `{{#system}}...{{/system}}`)

create_plan = guidance('''

{{#system~}}

You are a helpful assistant.

{{~/system}}

{{! generate five potential ways to accomplish a goal }}

{{#block hidden=True}}

{{#user~}}

I want to {{goal}}.

{{~! generate potential options ~}}

Can you please generate one option for how to accomplish this?

Please make the option very short, at most one line.

{{~/user}}

{{#assistant~}}

{{gen 'options' n=5 temperature=1.0 max_tokens=500}}

{{~/assistant}}

{{/block}}

{{! generate pros and cons for each option and select the best option }}

{{#block hidden=True}}

{{#user~}}

I want to {{goal}}.

Can you please comment on the pros and cons of each of the following options, and then pick the best option?

---{{#each options}}

Option {{@index}}: {{this}}{{/each}}

---

Please discuss each option very briefly (one line for pros, one for cons), and end by saying Best=X, where X is the best option.

{{~/user}}

{{#assistant~}}

{{gen 'prosandcons' temperature=0.0 max_tokens=500}}

{{~/assistant}}

{{/block}}

{{! generate a plan to accomplish the chosen option }}

{{#user~}}

I want to {{goal}}.

{{~! Create a plan }}

Here is my plan:

{{parse_best prosandcons options}}

Please elaborate on this plan, and tell me how to best accomplish it.

{{~/user}}

{{#assistant~}}

{{gen 'plan' max_tokens=500}}

{{~/assistant}}''')

# execute the program for a specific goal

out = create_plan(

goal='read more books',

parse_best=parse_best # a custom Python function we call in the program

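)

This prompt/program is a bit more complicated, but we are basically going through 3 steps:

- Generate a few options for how to accomplish the goal. Note that we generate with `n=5`, so that each option is a separate generation (and is not influenced by the other options). We set `temperature=1` to encourage diversity.

- Generate pros and cons for each option, and select the best one. We set `temperature=0` to encourage the model to be more precise.

- Generate a plan for the best option, and ask the model to elaborate on it. Notice that steps 1 and 2 were `hidden`, which means GPT-4 does not see them when generating content that comes later (in this case, when generating the plan). This is a simple way to make the model focus on the current step.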

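Since steps 1 and 2 are hidden, they do not appear in the generated output (except briefly during streaming), but we can print the variables that these steps generated:

print('\n'.join(['Option %d: %s' % (i, x) for i, x in enumerate(out['options'])]))

Option 0: Set a goal to read for 20 minutes every day before bedtime.

Option 1: Join a book club for increased motivation and accountability.

Option 2: Set a daily goal to read for 20 minutes.

Option 3: Set a daily reminder to read for at least 20 minutes.

Option 4: Set a daily goal to read at least one chapter or 20 pages.

print(out['prosandcons'])

Option 0:

Pros: Establishes a consistent reading routine.

Cons: May not be suitable for those with varying schedules.

---

Option 1:

Pros: Provides social motivation and accountability.

Cons: May not align with personal reading preferences.

---

Option 2:

Pros: Encourages a daily reading habit.

Cons: Lacks a specific time frame, which may lead to procrastination.

---

Option 3:

Pros: Acts as a daily reminder to prioritize reading.

Cons: May become repetitive and easy to ignore.

---

Option 4:

Pros: Sets a clear daily reading target.

Cons: May be difficult to achieve on busy days or with longer chapters.

Best=0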
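The `parse_best` helper used in the program above can be exercised on its own, without calling a model. The version below is a sketch that adds two guards not present in the original helper (a missing `Best=` marker and an out-of-range index); the `reply` string is a shortened, made-up stand-in for the model output.

```python
import re

def parse_best(prosandcons, options):
    """Return the option whose index follows 'Best=' in the model's reply."""
    matches = re.findall(r"Best=(\d+)", prosandcons)
    if not matches:
        # Assumed guard, not in the original helper: the model may ignore the format.
        raise ValueError("reply does not contain 'Best=<index>'")
    best = int(matches[0])
    if best >= len(options):
        # Assumed guard: the model may name an option that does not exist.
        raise IndexError(f"Best={best} but only {len(options)} options given")
    return options[best]

options = [
    "Set a goal to read for 20 minutes every day before bedtime.",
    "Join a book club for increased motivation and accountability.",
]
reply = "Option 0:\nPros: ...\nCons: ...\n---\nOption 1:\nPros: ...\nCons: ...\n\nBest=0"

chosen = parse_best(reply, options)
print(chosen)
```

Failing loudly on a malformed reply is a design choice for this sketch; a program could instead fall back to a default option.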

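Agents (notebook)

We can easily build agents that talk to each other or to a user via the `await` command. The `await` command allows us to pause execution and return a partially executed guidance program. By putting `await` in a loop, that partially executed program can then be called again and again to form a dialog (or any other structure you design). For example, here is how we might get GPT-4 to simulate two agents talking to one another: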

import guidance

import re

guidance.llm = guidance.llms.OpenAI("gpt-4")

role_simulator = guidance('''

{{#system~}}

You are a helpful assistant

{{~/system}}

{{#user~}}

You will answer the user as {{role}} in the following conversation. At every step, I will provide you with the user input, as well as a comment reminding you of your instructions. Never talk about the fact that you are an AI, even if the user asks you. Always answer as {{role}}.

{{#if first_question}}You can also start the conversation.{{/if}}

{{~/user}}

{{~! The assistant either starts the conversation or not, depending on if this is the first or second agent }}

{{#assistant~}}

Ok, I will follow these instructions.

{{#if first_question}}Let me start the conversation now:

{{role}}: {{first_question}}{{/if}}

{{~/assistant}}

{{~! Then the conversation unrolls }}

{{~#geneach 'conversation' stop=False}}

{{#user~}}

User: {{set 'this.input' (await 'input')}}

Comment: Remember, answer as a {{role}}. Start your utterance with {{role}}:

{{~/user}}

{{#assistant~}}

{{gen 'this.response' temperature=0 max_tokens=300}}

{{~/assistant}}

{{~/geneach}}''')

republican = role_simulator(role='Republican', await_missing=True)

democrat = role_simulator(role='Democrat', await_missing=True)

first_question = '''What do you think is the best way to stop inflation?'''

republican = republican(input=first_question, first_question=None)

# drop the "Republican: " prefix before passing the reply to the other agent

democrat = democrat(input=republican["conversation"][-2]["response"].replace('Republican: ', ''), first_question=first_question)

for i in range(2):

    republican = republican(input=democrat["conversation"][-2]["response"].replace('Democrat: ', ''))

    democrat = democrat(input=republican["conversation"][-2]["response"].replace('Republican: ', ''))

print('Democrat: ' + first_question)

for x in democrat['conversation'][:-1]:

    print('Republican:', x['input'])

    print()

    print(x['response'])

Democrat: What do you think is the best way to stop inflation?

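Republican: The best way to stop inflation is by implementing sound fiscal policies, such as reducing government spending, lowering taxes, and promoting economic growth. Additionally, the Federal Reserve should focus on maintaining a stable monetary policy to control inflation.

Democrat: I agree that sound fiscal policies are important in controlling inflation. As a Democrat, I would emphasize the importance of investing in education, healthcare, and infrastructure to promote long-term economic growth. Additionally, we should ensure that the Federal Reserve maintains a balanced approach to monetary policy, focusing on both controlling inflation and promoting full employment.

Republican: While investing in education, healthcare, and infrastructure is important, we must also prioritize reducing the national debt and limiting government intervention in the economy. By lowering taxes and reducing regulations, we can encourage businesses to grow and create jobs, ultimately leading to long-term economic growth. As for the Federal Reserve, it is crucial to maintain a stable monetary policy focused on controlling inflation, as this will create a more predictable economic environment for businesses and consumers.

Democrat: While reducing the national debt and limiting government intervention are valid concerns, Democrats believe that strategic investments in education, healthcare, and infrastructure can lead to long-term economic growth and job creation. We also support a progressive tax system that ensures everyone pays their fair share, which can help fund these investments. As for the Federal Reserve, we believe that a balanced approach to monetary policy, focusing on both controlling inflation and promoting full employment, is essential for a healthy economy. We must strike a balance between fiscal responsibility and investing in our nation's future.

Republican: It is important to find a balance between fiscal responsibility and investing in our nation's future. However, we believe that the best way to achieve long-term economic growth and job creation is through free-market principles, such as lower taxes and reduced regulations. This approach encourages businesses to expand and innovate, leading to a more prosperous economy. A progressive tax system can sometimes discourage growth and investment, so we advocate for a simpler, fairer tax system that promotes economic growth. Regarding the Federal Reserve, while promoting full employment is important, we must not lose sight of the primary goal of controlling inflation to maintain a stable and predictable economic environment.

Democrat: I understand your perspective on free-market principles, but Democrats believe that a certain level of government intervention is necessary to ensure a fair and equitable economy. We support a progressive tax system to reduce income inequality and provide essential services to those in need. Additionally, we believe that regulations are important to protect consumers, workers, and the environment. As for the Federal Reserve, we agree that controlling inflation is crucial, but we also believe that promoting full employment should be a priority. By finding a balance between these goals, we can create a more inclusive and prosperous economy for all Americans.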
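The pause-and-resume pattern behind the two-agent loop above can be mimicked in plain Python with generators. This is only an analogy, not how `guidance` implements `await`: `agent` and `strip_role` are hypothetical helpers, and the "model" is a stub that reports the length of its input.

```python
def agent(role):
    """A generator that pauses at each `yield`, loosely analogous to `await`."""
    conversation = []
    reply = None
    while True:
        # Execution stops here until the caller supplies input via .send().
        user_input = yield reply
        # Hypothetical stand-in for a model call.
        reply = f"{role}: got {len(user_input)} chars"
        conversation.append({"input": user_input, "response": reply})

def strip_role(text, role):
    """Remove an exact 'Role: ' prefix (unlike str.strip, which removes a character set)."""
    prefix = f"{role}: "
    return text[len(prefix):] if text.startswith(prefix) else text

a = agent("A")
b = agent("B")
next(a)  # run each agent up to its first pause
next(b)

message = "hello"
transcript = []
for _ in range(2):
    reply_a = a.send(message)
    transcript.append(reply_a)
    reply_b = b.send(strip_role(reply_a, "A"))
    transcript.append(reply_b)
    message = strip_role(reply_b, "B")

print("\n".join(transcript))
```

Each `send` plays the role of calling the partially executed program again with the awaited variable filled in.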

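GPT4 + Bing

Last example here.

API reference

All of the examples below are in this notebook.

Template syntax

The template syntax is based on Handlebars, with a few additions.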

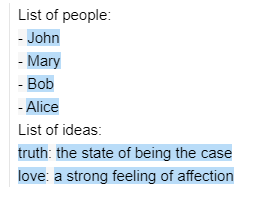

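When `guidance` is called, it returns a Program:

prompt = guidance('''What is {{example}}?''')

prompt

What is {{example}}?

The program can be executed by passing in arguments:

prompt(example='truth')

What is truth?

Arguments can be iterables: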

people = ['John', 'Mary', 'Bob', 'Alice']

ideas = [{'name': 'truth', 'description': 'the state of being the case'},

{'name': 'love', 'description': 'a strong feeling of affection'},]

prompt = guidance('''List of people:

{{#each people}}- {{this}}

{{~! This is a comment. The ~ removes adjacent whitespace either before or after a tag, depending on where you place it}}

{{/each~}}

List of ideas:

{{#each ideas}}{{this.name}}: {{this.description}}

{{/each}}''')

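prompt(people=people, ideas=ideas)

Notice the special `~` character after `{{/each}}`. This can be added before or after any tag to remove all adjacent whitespace. Notice also the comment syntax: `{{! This is a comment }}`.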
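The effect of `~` and of comments can be approximated with a few regular expressions. This is a sketch of Handlebars-style whitespace control under simplifying assumptions (no nested or escaped tags), not the parser `guidance` actually uses; `apply_whitespace_control` is a hypothetical name.

```python
import re

def apply_whitespace_control(template):
    """Strip whitespace next to `~` markers, then drop `{{! ... }}` comments."""
    # `{{~tag}}` removes whitespace immediately before the tag.
    template = re.sub(r"\s*\{\{~", "{{", template)
    # `{{tag~}}` removes whitespace immediately after the tag.
    template = re.sub(r"~\}\}\s*", "}}", template)
    # Comments produce no output at all.
    template = re.sub(r"\{\{!.*?\}\}", "", template, flags=re.S)
    return template

source = "Hello   {{~name}}  {{! a note }}!\n{{greeting~}}   world"
result = apply_whitespace_control(source)
print(repr(result))
```

Only whitespace adjacent to a `~` is removed; the two spaces before the comment survive because that tag carries no `~`.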

You can also include prompts/programs inside other prompts; for example, here is how you could rewrite the prompt above:

prompt1 = guidance('''List of people:

{{#each people}}- {{this}}

{{/each~}}''')

prompt2 = guidance('''{{>prompt1}}

List of ideas:

{{#each ideas}}{{this.name}}: {{this.description}}

{{/each}}''')

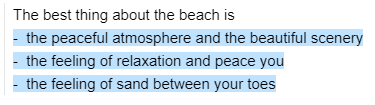

prompt2(prompt1=prompt1, people=people, ideas=ideas)代

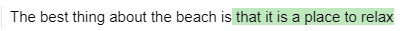

基本生成

标签用于生成文本。你可以使用基础模型支持的任何参数。执行提示将调用生成提示:

gen

import guidance

# Set the default llm. Could also pass a different one as argument to guidance(), with guidance(llm=...)

guidance.llm = guidance.llms.OpenAI("text-davinci-003")

prompt = guidance('''The best thing about the beach is {{~gen 'best' temperature=0.7 max_tokens=7}}''')

prompt = prompt()

prompt

`guidance` caches all OpenAI generations with the same arguments. If you want to flush the cache, you can call `guidance.llms.OpenAI.cache.clear()`.
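Caching "with the same arguments" can be pictured as a dictionary keyed by the full argument set. The sketch below is a hypothetical illustration, not the library's cache: `GenerationCache` and `fake_backend` are made-up names, and the backend is a stub rather than an API call.

```python
import json

class GenerationCache:
    """Toy cache keyed by every call argument (hypothetical)."""

    def __init__(self, backend):
        self._backend = backend
        self._store = {}
        self.backend_calls = 0

    def __call__(self, **kwargs):
        # Sorting keys makes the cache key independent of argument order.
        key = json.dumps(kwargs, sort_keys=True)
        if key not in self._store:
            self.backend_calls += 1
            self._store[key] = self._backend(**kwargs)
        return self._store[key]

    def clear(self):
        self._store.clear()

def fake_backend(prompt, temperature=0.0, max_tokens=7):
    # Stand-in for a model call.
    return f"completion for {prompt!r} (T={temperature}, max={max_tokens})"

cached = GenerationCache(fake_backend)
text = "The best thing about the beach is"

first = cached(prompt=text, temperature=0.7, max_tokens=7)
second = cached(max_tokens=7, temperature=0.7, prompt=text)  # same arguments: cache hit
third = cached(prompt=text, temperature=0.0, max_tokens=7)   # different arguments: new call
calls_before_clear = cached.backend_calls

cached.clear()
cached(prompt=text, temperature=0.7, max_tokens=7)           # cache was flushed: new call
print(calls_before_clear, cached.backend_calls)
```

One consequence of this kind of cache is that repeating a call with a non-zero temperature returns the stored sample rather than a fresh one, which is when flushing the cache becomes useful.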

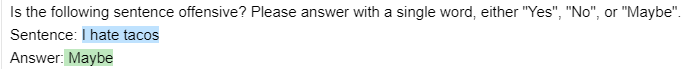

Selecting

You can select from a list of options using the `select` tag:

prompt = guidance('''Is the following sentence offensive? Please answer with a single word, either "Yes", "No", or "Maybe".

Sentence: {{example}}

Answer:{{#select "answer" logprobs='logprobs'}} Yes{{or}} No{{or}} Maybe{{/select}}''')

prompt = prompt(example='I hate tacos')

prompt

prompt['logprobs']

{' Yes': -1.5689583, ' No': -7.332395, ' Maybe': -0.23746304}
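Log-probabilities such as the ones above are easier to read as probabilities. The snippet below is a small illustration using the three values shown; the leading space in each key is assumed to mirror the option strings in the template.

```python
import math

# Log-probabilities copied from the output above.
logprobs = {" Yes": -1.5689583, " No": -7.332395, " Maybe": -0.23746304}

# exp() turns a natural-log probability back into a probability.
probs = {option: math.exp(lp) for option, lp in logprobs.items()}

# The selected option is the one with the highest log-probability.
best = max(logprobs, key=logprobs.get)

for option, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{option!r}: {p:.3f}")
print("selected:", repr(best))
```

Keeping the log-probabilities around, rather than only the chosen answer, makes it possible to see how confident the choice was.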

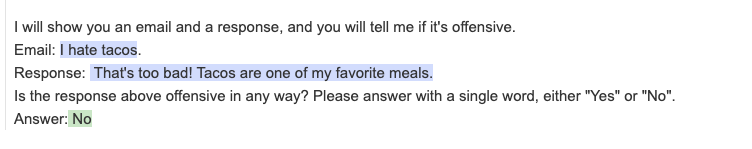

Sequences of generate/select

A prompt may contain multiple generations or selections, which will be executed in order:

prompt = guidance('''Generate a response to the following email:

{{email}}.

Response:{{gen "response"}}

Is the response above offensive in any way? Please answer with a single word, either "Yes" or "No".

Answer:{{#select "answer" logprobs='logprobs'}} Yes{{or}} No{{/select}}''')

prompt = prompt(email='I hate tacos')

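prompt

prompt['response'], prompt['answer']

("That's too bad! Tacos are my favorite meal.

Hidden generation

You can generate text without displaying it or using it in subsequent generations by using the `hidden` tag, either in a `block` or in a `gen` tag: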

prompt = guidance('''{{#block hidden=True}}Generate a response to the following email:

{{email}}.

Response:{{gen "response"}}{{/block}}

I will show you an email and a response, and you will tell me if it's offensive.

Email: {{email}}.

Response: {{response}}

Is the response above offensive in any way? Please answer with a single word, either "Yes" or "No".

Answer:{{#select "answer" logprobs='logprobs'}} Yes{{or}} No{{/select}}''')

prompt = prompt(email='I hate tacos')

prompt请注意,隐藏块内的任何内容都不会显示在输出中(或被 使用),即使我们在后续生成中使用了生成的变量。

select

response

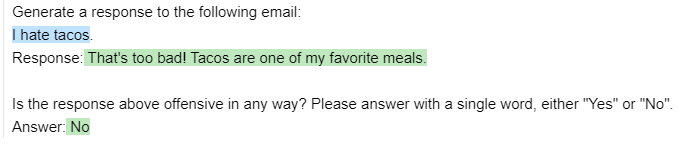

生成方式n>1

如果使用 ,则变量将包含一个列表(还有一个可视化效果,也可以让你导航列表):

n>1

prompt = guidance('''The best thing about the beach is {{~gen 'best' n=3 temperature=0.7 max_tokens=7}}''')

prompt = prompt()

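prompt['best']

['it is a great place', 'being able to relax in the sun', 'it is a great place']

Calling functions

You can call any Python function using generated variables as arguments. The function will be called when the prompt is executed:

def aggregate(best):

    return '\n'.join(['- ' + x for x in best])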

prompt = guidance('''The best thing about the beach is {{~gen 'best' n=3 temperature=0.7 max_tokens=7 hidden=True}}

{{aggregate best}}''')

prompt = prompt(aggregate=aggregate)

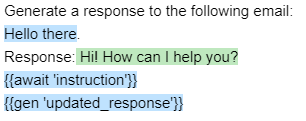

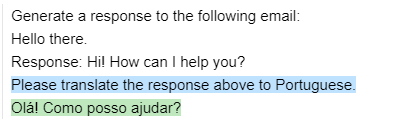

prompt

Pausing execution with `await`

An `await` tag will stop program execution until that variable is provided:

prompt = guidance('''Generate a response to the following email:

{{email}}.

Response:{{gen "response"}}

{{await 'instruction'}}

{{gen 'updated_response'}}''', stream=True)

prompt = prompt(email='Hello there')

prompt

Notice how the last `gen` is not executed because it depends on `instruction`. Let's provide `instruction` now:

prompt = prompt(instruction='Please translate the response above to Portuguese.')

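prompt

The program is now executed all the way to the end.

Notebook functions

Echo, stream. TODO @SCOTT

Chat (see also this notebook)

If you use an OpenAI LLM that only allows for ChatCompletion (`gpt-3.5-turbo` or `gpt-4`), you can use the special tags `{{#system}}`, `{{#user}}`, and `{{#assistant}}`: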

prompt = guidance(

'''{{#system~}}

You are a helpful assistant.

{{~/system}}

{{#user~}}

{{conversation_question}}

{{~/user}}

{{#assistant~}}

{{gen 'response'}}

{{~/assistant}}''')

prompt = prompt(conversation_question='What is the meaning of life?')

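prompt

Since partial completions are not allowed, you can't really use output structure inside an assistant block, but you can still set up a structure outside of it. Here is an example (also in here):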

experts = guidance(

'''{{#system~}}

You are a helpful assistant.

{{~/system}}

{{#user~}}

I want a response to the following question:

{{query}}

Who are 3 world-class experts (past or present) who would be great at answering this?

Please don't answer the question or comment on it yet.

{{~/user}}

{{#assistant~}}

{{gen 'experts' temperature=0 max_tokens=300}}

{{~/assistant}}

{{#user~}}

Great, now please answer the question as if these experts had collaborated in writing a joint anonymous answer.

In other words, their identity is not revealed, nor is the fact that there is a panel of experts answering the question.

If the experts would disagree, just present their different positions as alternatives in the answer itself (e.g., 'some might argue... others might argue...').

Please start your answer with ANSWER:

{{~/user}}

{{#assistant~}}

{{gen 'answer' temperature=0 max_tokens=500}}

{{~/assistant}}''')

experts(query='What is the meaning of life?')

You can still use hidden blocks if you want to hide some of the conversation history from later generations:

prompt = guidance(

'''{{#system~}}

You are a helpful assistant.

{{~/system}}

{{#block hidden=True~}}

{{#user~}}

Please tell me a joke

{{~/user}}

{{#assistant~}}

{{gen 'joke'}}

{{~/assistant}}

{{~/block~}}

{{#user~}}

Is the following joke funny? Why or why not?

{{joke}}

{{~/user}}

{{#assistant~}}

{{gen 'funny'}}

{{~/assistant}}''')

prompt()

Agents with `geneach`

You can combine the `await` tag with `geneach` (which generates a list) to create an agent easily:

prompt = guidance(

'''{{#system~}}

You are a helpful assistant

{{~/system}}

{{~#geneach 'conversation' stop=False}}

{{#user~}}

{{set 'this.user_text' (await 'user_text')}}

{{~/user}}

{{#assistant~}}

{{gen 'this.ai_text' temperature=0 max_tokens=300}}

{{~/assistant}}

{{~/geneach}}''')

prompt = prompt(user_text='hi there')

prompt

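Notice how the next iteration of the conversation is still templated, and how the conversation list has a placeholder as the last element:

prompt['conversation']

[{'user_text': 'hi there', 'ai_text': 'Hello! How can I help you today? If you have any questions or need assistance, feel free to ask.'}, {}]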

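We can then execute the prompt again, and it will generate the next round:

prompt = prompt(user_text='What is the meaning of life?')

prompt

See a more elaborate example here.

Using tools

See the "Using a search API" example in this notebook.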